Good Loop – Slowing down the spread of synthetic dis-/misinformation

Project description

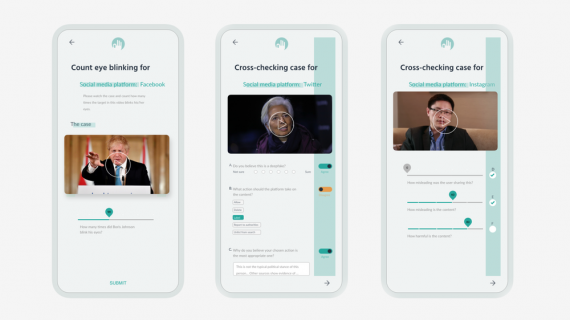

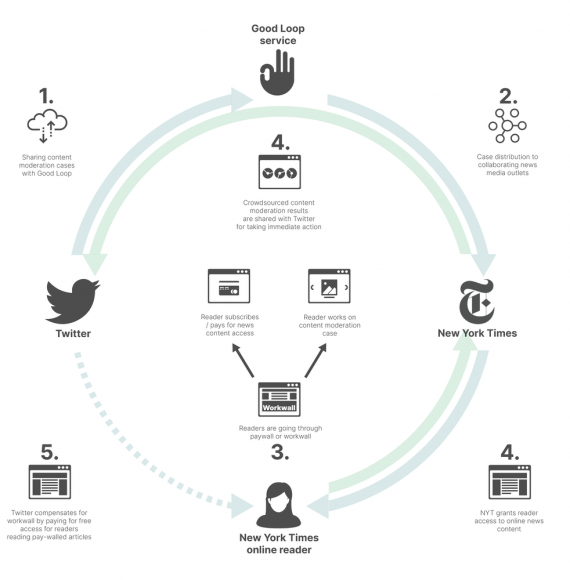

Through the service provider Good-Loop, newspaper readers can connect with content moderators and help them slow the rapid spread of online dis-/misinformation. Fake news has evolved and become harder to identify: synthetic dis-/misinformation is treated like all other internet content and shows up in search results & social media. Without the right infrastructure in place, content moderators will struggle to tell real audio files, texts, images, & videos apart from A.I.-manipulated ones.

Content moderators supported by online newspaper readers

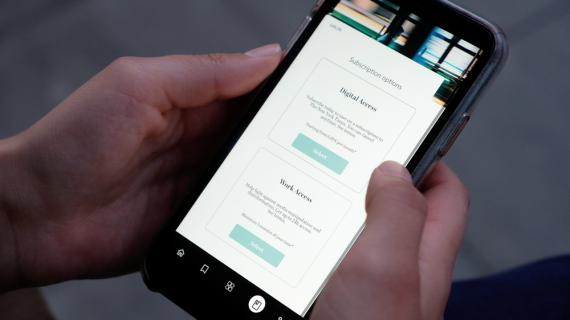

This speculative design concept is set in a world where our information environment has deteriorated due to the massive proliferation of dis-/misinformation and the lack of serious attempts to mitigate it. As a result, trustworthy news has become hard to find online. Via Good-Loop, online newspapers can offer their readers two options: pay for reliable news content, or work for it. This new type of crowdsourced content moderation anticipates a growing economy in which reliable, trustworthy information is exchanged for digital labor in the era of truth decay.

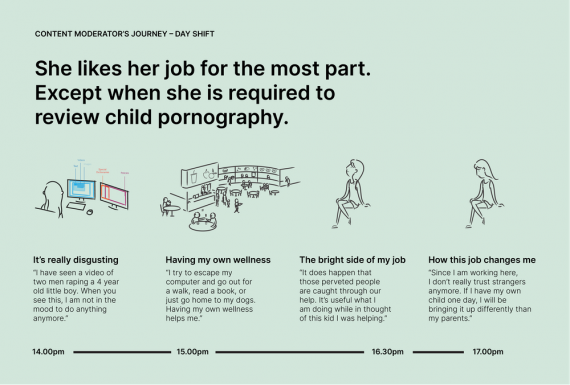

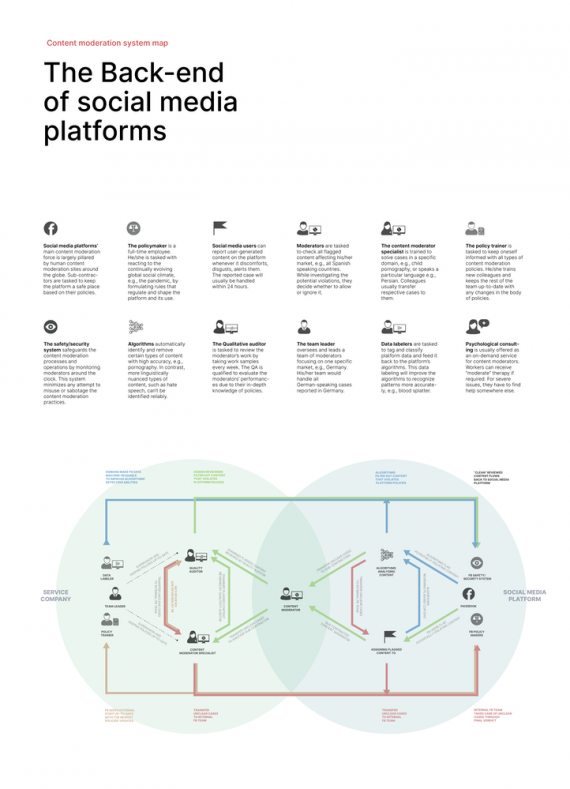

The moderator

Moderators will remain essential to addressing dis-/misinformation day to day. They act as first responders on social media platforms and, through their powerful editorial role, decide what stays on a platform and what gets removed.

Collaborative, transparent content moderation

Dis-/misinformation poses a threat to public health. Good-Loop makes content moderation practices more transparent by sharing moderation cases and policies with online newspaper readers. This collaboration between moderators and readers aims to:

- Highlight the critical and dangerous work done by moderators.

- Slow down the spread of synthetic dis-/misinformation.

- Increase accountability & transparency through collaboration between news outlets and social media platforms.

Why this award

During my field research, I saw first-hand how subcontractors carry out content moderation every day. I find it hard to understand why platforms equip them with such user-unfriendly back-end interfaces and opaque algorithms to combat the flood of dis-/misinformation. There is still so much left to do in this invisibly designed industry.

If we do nothing, content moderation practices and algorithms will remain black-boxed and eventually erode free speech. With this award, I hope to encourage others to dig deeper into this seemingly unsolvable problem, and to explore other dark corners of the Internet and bring light to them as well. Because design is everywhere – and it’s needed.

The main research objectives were to understand the moderator’s work environment through field research and to find opportunities, through participatory design, to support the moderator’s future work routine. Interviews & contextual inquiries were conducted with subcontractors of large social media platforms in three countries. The synthesised research was used in a future-casting workshop, in which fellow students helped create future scenarios. The workshop pictured an emerging threat landscape in which harmful & misleading synthetic media become ubiquitous in search results & social media. Based on that, concepts were designed, evaluated, and finally user-tested.