U-Bot (The robot that teaches orientation and mobility skills to blind children)

Team

Company | Institution

Category

Type

Project description

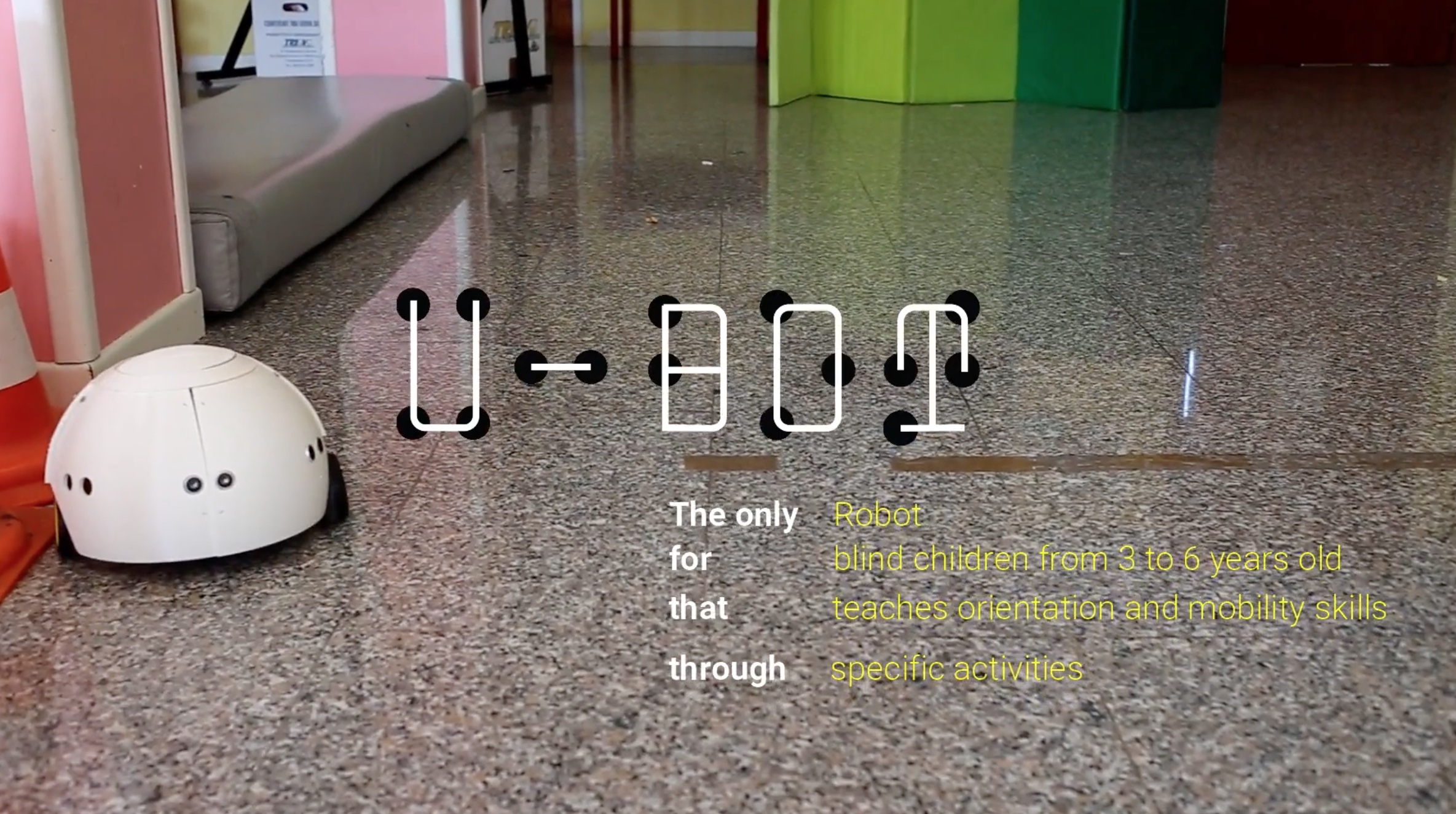

The U-Bot is a robot powered by AI behaviors, and its aim is to make blind children aware of the space around them. An important phase of this project was the research, which focused on the products available on the market that help blind people gain a better understanding of “orientation and mobility”.

Technological accessibility requires that elderly people and young children be inclined to learn new things. For the visually impaired population, we have to find and provide the right sensory modality to inform blind children about their surrounding environment soon after birth. Early intervention is potentially much more powerful because the brain is more plastic at a young age, yet all the technological devices for visually impaired people that exist today have been developed for adults and are not well adapted for children. Although the past decade has seen significant advances in new technological solutions for locomotion, children are still far from having personalized solutions.

With those insights in mind, I decided to embrace my tutor’s idea of designing an open source solution for healthcare. The robot is easy to make, easy to use and effective for the orientation and mobility skills the children are learning. The external part can be 3D printed and the internal body can be milled. The electronics are built on an Arduino and a 1Sheeld board, which makes a Bluetooth connection to the accompanying app possible. Multiple ultrasonic sensors are attached to the external part to detect the user and avoid obstacles.
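To illustrate how ultrasonic sensors of this kind are typically used for obstacle detection, here is a minimal sketch in Python of the underlying arithmetic. It is not the U-Bot firmware: the function names, the sensor labels, the 20 cm stop threshold and the assumed speed of sound (343 m/s, dry air at about 20 °C) are all hypothetical choices made for the example.

```python
from typing import Dict, Optional, Tuple

# Assumption: speed of sound in dry air at about 20 °C.
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_to_distance_cm(echo_us: float) -> float:
    """Convert a round-trip echo time in microseconds to a one-way distance in cm."""
    round_trip_m = SPEED_OF_SOUND_M_PER_S * (echo_us / 1_000_000)
    # The pulse travels to the obstacle and back, so halve the path.
    return round_trip_m / 2 * 100


def nearest_obstacle(
    echo_times_us: Dict[str, float], stop_cm: float = 20.0
) -> Optional[Tuple[str, float]]:
    """Return (sensor_name, distance_cm) for the closest reading under stop_cm, else None.

    The sensor names and the default threshold are hypothetical.
    """
    if not echo_times_us:
        return None
    distances = {name: echo_to_distance_cm(t) for name, t in echo_times_us.items()}
    name = min(distances, key=distances.get)
    return (name, distances[name]) if distances[name] < stop_cm else None
```

With several sensors around the shell, a check like `nearest_obstacle({"front": 1000, "left": 5000})` would flag the front sensor, since a 1000 µs echo corresponds to roughly 17 cm.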

We defined three main activities that can be played. In the first exercise, the tutor controls the robot through the accompanying app. The user follows the sound coming from the device to work out its position in space, and stops the sound by pressing the button located on top of the U-Bot.
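The flow of the first exercise can be modeled as a small state object. The sketch below is illustrative only: the class name, its fields, and the assumption that the sound starts as soon as the tutor moves the robot are hypothetical and not taken from the actual U-Bot app or firmware.

```python
from dataclasses import dataclass


@dataclass
class ExerciseOne:
    """Hypothetical model of one round of the first exercise."""

    x: float = 0.0          # robot position, arbitrary units
    y: float = 0.0
    sound_on: bool = False  # whether the robot is emitting its guide sound
    found: bool = False     # whether the child has reached the robot

    def tutor_move(self, dx: float, dy: float) -> None:
        """Tutor repositions the robot from the app; assumed to start the sound."""
        self.x += dx
        self.y += dy
        self.sound_on = True
        self.found = False

    def press_button(self) -> None:
        """Child presses the top button: the sound stops and the round is complete."""
        if self.sound_on:
            self.sound_on = False
            self.found = True
```

A round would then read as `round_ = ExerciseOne(); round_.tutor_move(1.0, 2.0); round_.press_button()`, after which `round_.found` is true and the sound is off.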

In the second exercise, the user has to guess where the robot is by listening to the sound and try to hit it with an interactive ball, developing sound localization and motor skills. In the third exercise, the user has to follow the robot by sound along a path created by a second user, improving mobility.

We also defined a context scenario, which could be a center at a school for the blind where visually impaired children learn “orientation and mobility.”
